We thought there were quite possibly some problems with her result, but weren’t sure a priori how important they might be, so that was an extra motivation to revisit our own work.

It took a while, mostly because I was trying to incrementally improve our previous method (multivariate pattern scaling), and it was a long time before I realised that what I really wanted was an Ensemble Kalman Filter, which is what Tierney et al (TEA) had already used. However, they built their ensemble by sampling the internal variability of a single model (CESM1-2) under a few different sets of boundary conditions (18ka and 21ka for the LGM, 0 and 3ka for the pre-industrial), whereas I’m using the PMIP meta-ensemble of PMIP2, PMIP3 and PMIP4 models.

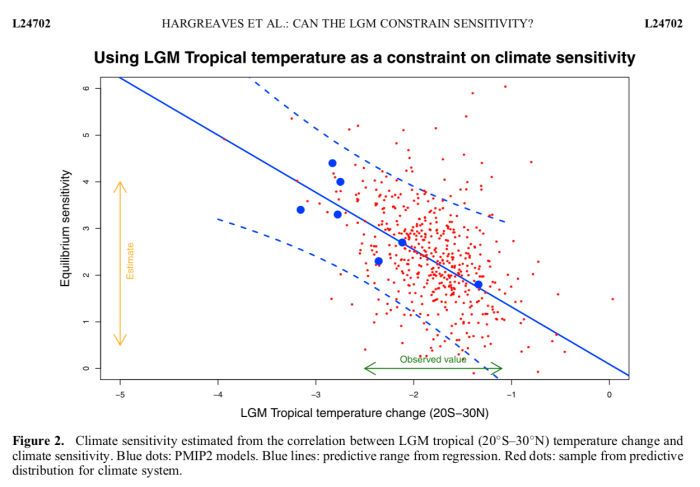

OK, to be honest, that was only part of the reason; the other part was general procrastination and laziness. Once I could see where it was going, tidying up the details for publication was a bit boring. But it got done, and the paper is currently in review at CPD. Our new headline result is -4.5±1.7C, so slightly colder and much more uncertain than our previous result, but nowhere near as cold as TEA.

I submitted an abstract to the EGU meeting, which is on again right now. It’s now fully blended, in-person and online, which is a fabulous step forward that I’ve been agitating for from the sidelines for a while. They used to say it was impossible, but covid forced their hand somewhat with two years of virtual meetings, and now they have worked out how to blend the two. There are a few teething niggles, but it’s working pretty well, at least for us as virtual attendees. Talks are very short, so rather than go over the whole reconstruction again (I’ve presented early versions previously) I focussed on just one question: why is our result so different from Tierney et al’s? While I hadn’t set out specifically to critique that work, the reviewers seemed keen to explore the discrepancy, so I’ve recently done a bit more digging into our result. My presentation can be found via this link, I think.

One might assume that a major reason was that the new TEA proxy data set is substantially colder than what went before, but we didn’t find that to be the case. In fact many of the gridded data points coincide physically with the MARGO SST data set that we had previously used, and the average value over these locations was only 0.3C colder in TEA than in MARGO (though there was a substantial RMS difference between the points, which is interesting in itself as it suggests that these temperature estimates may still be rather uncertain). A modest cooling of 0.3C in the mean for these SST points might be expected to translate to about 0.5C or so in global surface air temperature, nowhere near the 2.1C difference between our 2013 result and their 2020 paper. Also, our results are very similar whether we use MARGO, TEA, or both together. So, we don’t believe the new TEA data are substantially different from what went before.
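To make the "small mean difference, large RMS difference" point concrete, here is a minimal sketch of that kind of comparison. It assumes both data sets have already been put on a common set of grid points (NaN where a data set has no value) and uses a simple unweighted average over the shared points; the function name `colocated_differences` and the numbers in the usage line are made up for illustration and are not the real TEA or MARGO values.

```python
import numpy as np

def colocated_differences(sst_a, sst_b):
    """Mean and RMS difference between two SST anomaly data sets at shared points.

    sst_a, sst_b: 1-D arrays of LGM SST anomalies (degC) on the same grid points,
    with NaN where a data set has no value. Only points present in both are used,
    and the average is unweighted (an assumption for this sketch).
    """
    a = np.asarray(sst_a, dtype=float)
    b = np.asarray(sst_b, dtype=float)
    both = ~np.isnan(a) & ~np.isnan(b)      # points covered by both data sets
    diff = a[both] - b[both]
    mean_diff = diff.mean()                 # systematic offset (a minus b)
    rms_diff = np.sqrt(np.mean(diff ** 2))  # point-by-point disagreement
    return mean_diff, rms_diff, int(both.sum())

# Hypothetical values: differences of -1.0, +1.0 and -0.6 largely cancel in the
# mean but not in the RMS.
print(colocated_differences([-3.0, -1.0, np.nan, -2.6], [-2.0, -2.0, -1.0, -2.0]))
```

A mean offset near zero alongside a large RMS difference is exactly the pattern that suggests the two data sets agree on average while individual point estimates remain uncertain.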

What is really different between TEA and our new work is the priors we used.

Here is a figure summarising our main analysis. It follows the Ensemble Kalman Filter approach: we start with a prior ensemble of model simulations (lower blue dots, summarised by the blue Gaussian curve above them), each of which is updated by nudging it towards the observations, generating the posterior ensemble (upper red dots and red curve). I’ve highlighted one model in green, which is CESM1-2. Under this plot I have pasted parts of a figure from Tierney et al showing their prior and posterior 95% ranges, with the scales carefully lined up. You can see that the middles of their ensembles, which are entirely based on CESM1-2, are really quite close to what we get with the CESM1-2 model (the big dots in their ranges are the medians of their distributions, which obviously aren’t quite Gaussian). Their calculation isn’t identical to ours with CESM1-2: it’s a different model simulation with different forcing, we are using different data, and there are various other differences in the details of the calculation. But it’s close.
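For readers who haven’t met the Ensemble Kalman Filter, here is a minimal sketch of a single analysis step in its textbook "perturbed observations" form. This is not necessarily the exact variant used in either paper (real applications may use deterministic square-root filters, localisation, non-linear proxy operators and so on); it assumes a linear observation operator `H` that simply picks out the grid cells where proxies exist, and independent Gaussian observation errors. The function name `enkf_update` is hypothetical.

```python
import numpy as np

def enkf_update(X, H, y, obs_err_sd, rng=None):
    """One stochastic (perturbed-observation) EnKF analysis step.

    X          : (n_state, n_ens) prior ensemble, one column per model simulation
    H          : (n_obs, n_state) linear observation operator (e.g. picks proxy grid cells)
    y          : (n_obs,) observed values
    obs_err_sd : (n_obs,) observation error standard deviations (assumed independent)
    Returns the (n_state, n_ens) posterior ensemble.
    """
    rng = np.random.default_rng() if rng is None else rng
    y = np.asarray(y, dtype=float)
    sd = np.asarray(obs_err_sd, dtype=float)
    n_ens = X.shape[1]

    A = X - X.mean(axis=1, keepdims=True)            # prior anomalies about the ensemble mean
    HA = H @ A                                       # the same anomalies in observation space
    PHt = A @ HA.T / (n_ens - 1)                     # ensemble estimate of P H^T
    S = HA @ HA.T / (n_ens - 1) + np.diag(sd ** 2)   # H P H^T + R
    K = np.linalg.solve(S, PHt.T).T                  # Kalman gain P H^T S^-1 (S is symmetric)

    # Each member is nudged towards its own perturbed copy of the observations,
    # which keeps the posterior spread statistically consistent.
    Y = y[:, None] + rng.standard_normal((y.size, n_ens)) * sd[:, None]
    return X + K @ (Y - H @ X)
```

Note that the gain is built entirely from the prior anomalies `A`, so every posterior member is its prior member plus some combination of those anomalies. Spatial patterns that are absent from the prior ensemble cannot appear in the posterior, which is why the choice of prior ensemble matters so much.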

Here is a terrible animated gif (it isn’t that fuzzy in the full presentation). It shows temperature anomalies (relative to pre-industrial) as a function of latitude for our posterior ensemble of reconstructions (thin black lines, with the thick line showing the mean), with the CESM-derived member highlighted in green and Tierney et al’s mean estimate added in purple. The structural similarity between those two lines is striking.

A simple calculation also shows that the global temperature field of our CESM-derived sample is closer to their mean, in terms of RMS difference, than that of any other member of our ensemble. Clearly, there’s a strong imprint of the underlying model even after the nudge towards the data sets.
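A calculation of this kind might look like the sketch below. It assumes every field has already been regridded onto one common latitude-longitude grid and uses cos(latitude) area weights; both choices, and the function name `closest_member`, are illustrative assumptions rather than a description of exactly how our calculation was done.

```python
import numpy as np

def closest_member(ensemble, reference, lat):
    """Find the ensemble member closest to `reference` in area-weighted RMS difference.

    ensemble  : (n_ens, n_lat, n_lon) temperature anomaly fields
    reference : (n_lat, n_lon) field on the same grid (e.g. another study's mean)
    lat       : (n_lat,) latitudes in degrees, used for cos(latitude) area weights
    """
    weights = np.cos(np.deg2rad(lat))[:, None] * np.ones(reference.shape[1])
    weights /= weights.sum()                           # normalise so the weights sum to 1
    sq_diff = (ensemble - reference[None, :, :]) ** 2
    rms = np.sqrt((sq_diff * weights).sum(axis=(1, 2)))  # one RMS value per member
    return int(np.argmin(rms)), rms
```

Area weighting matters here: without it, the many small high-latitude grid cells would dominate the comparison.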

So, this is why we think their result is largely down to their choice of prior. While we have a solution that looks like their mean estimate, this lies close to the edge of our range. The reason they don’t have any solutions that look like the bulk of our results is simply that they excluded them a priori. It’s nothing to do with their new data or their analysis method.
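A deliberately oversimplified one-number analogue shows why a narrow prior matters so much. This treats a single scalar (say, a temperature anomaly) with a Gaussian prior and one Gaussian observation, rather than an ensemble of full spatial fields, and all the numbers are invented purely to show the mechanism; they are not values from either paper.

```python
def gaussian_update(prior_mean, prior_sd, obs, obs_sd):
    """Posterior mean and sd for a scalar Gaussian prior combined with one Gaussian observation."""
    w_prior = 1.0 / prior_sd ** 2   # precision of the prior
    w_obs = 1.0 / obs_sd ** 2       # precision of the observation
    post_var = 1.0 / (w_prior + w_obs)
    post_mean = post_var * (w_prior * prior_mean + w_obs * obs)
    return post_mean, post_var ** 0.5

# Hypothetical numbers: the same observation (-4.0 +/- 1.0) combined with a narrow
# prior centred away from it, and with a broad prior.
narrow = gaussian_update(-6.0, 0.5, -4.0, 1.0)
broad = gaussian_update(-5.0, 2.0, -4.0, 1.0)
print(narrow)  # posterior stays close to the narrow prior
print(broad)   # posterior is pulled most of the way towards the observation
```

With the narrow prior, the posterior ends up much nearer the prior than the observation, simply because the prior was given far more weight from the outset.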

We’ve been warning against the use of single-model ensembles to represent uncertainty in climate change for a full decade now; it’s disappointing that the message doesn’t seem to have got through.